Dan Farrelly - Inngest

@danfarrelly.com

4 months ago

At the end of the day, it's all highly dependent on your use case, though. What are you building?

Lastly, Inngest has you covered from local development to production: our local dev server runs on your machine for fast iteration, and the cloud gives you observability with metrics and traces. Your code can run on any cloud or infra, and it scales to billions of executions a month.

Because it's event-driven, Inngest can receive events from your application or from webhooks to trigger workflows. Those same events power recovery tooling like bulk replay when you have a bug or a third-party API issue that you need to recover from.

www.inngest.com/docs/platfor...

Inngest's SDKs all work with a very flexible "step" construct that makes it easy to define workflows in very normal looking code that can also generate new steps at runtime. This makes it ideal for workflows that are dynamic like AI agents or for building user-generated workflow systems.
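To make "steps generated at runtime" a bit more concrete, here's a rough sketch. `StepRunner`, `workflow`, and the step ids are all made up for illustration; this is not Inngest's SDK, and the idea that finished steps get saved and skipped on a retry is an assumption about how a step construct like this could behave, not a description of Inngest internals.

```typescript
// Hypothetical step runner for illustration only; NOT Inngest's SDK.
class StepRunner {
  // Ids of steps whose bodies actually ran during this invocation.
  executed: string[] = [];
  private saved: Map<string, unknown>;

  constructor(saved: Map<string, unknown> = new Map()) {
    this.saved = saved;
  }

  // Run a step once per id; reuse the saved result if the id was seen before.
  run<T>(id: string, fn: () => T): T {
    if (this.saved.has(id)) {
      return this.saved.get(id) as T;
    }
    const result = fn();
    this.saved.set(id, result);
    this.executed.push(id);
    return result;
  }
}

// Ordinary-looking code: the number of steps depends on data fetched at runtime.
function workflow(step: StepRunner): number {
  const items = step.run("fetch-items", () => ["a", "bb", "ccc"]);
  let total = 0;
  for (const item of items) {
    // One step per item, created while the workflow is running.
    total += step.run(`measure-${item}`, () => item.length);
  }
  return total;
}

// First invocation runs every step; a retry with the same saved state runs none.
const state = new Map<string, unknown>();
const firstRun = new StepRunner(state);
const firstTotal = workflow(firstRun);
const retry = new StepRunner(state);
const retryTotal = workflow(retry);
```

The loop is the interesting part: nothing declares up front how many steps exist, they just fall out of normal control flow.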

I'm not an expert on DBOS and it's new-ish, so it's hard for me to compare, but at its core, Inngest is event-driven and has a custom-built queue with multi-tenant-aware flow control that handles complex concurrency, throttling, and so on.

www.inngest.com/docs/guides/...

Over the last 2 years, many users have asked, “What will Zed 1.0 look like?” We took some time to write up our vision and are ready to share. Check out our roadmap for Zed 1.0 and beyond:

zed.dev/roadmap?b=39

Zed is built from the ground up in Rust, using our own custom GPU-powered UI framework. Owning all layers in the stack allows us to deliver low-latency performance, but comes with the cost of a long beta period as we implement everything from scratch.

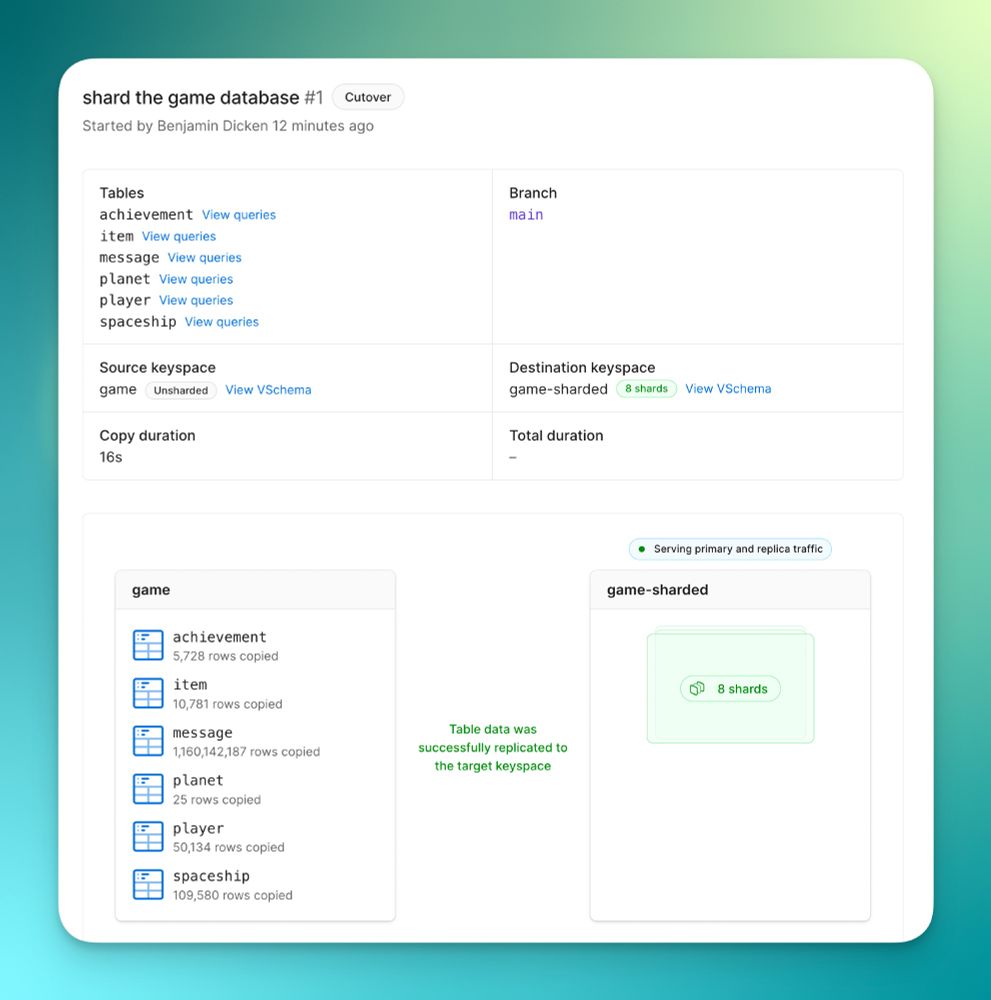

More info about our new sharded → sharded workflow:

planetscale.com/changelog/sh...

Use PlanetScale to operate your sharded database. We support:

- Workflows for going unsharded → sharded
- Scaling your shards to use larger or smaller instances
- Workflows for increasing or decreasing the number of shards (new)

All with no downtime.

No one's been able to do LLMs on the canvas. If you want to figure it out with us, clone the repo and join us on Discord! discord.gg/8FpbkU42fG

My hope is that teams will take this code and run with it. My double hope is that we get a community of developers trading tricks and strategies for generating better outputs, evals to train and tune against, and perhaps even new models to use.

The ai module is MIT licensed. It is designed to let developers write their own integrations with model providers like OpenAI, Google, or Anthropic, and to hack the shit out of it to get better outputs.

So rather than trying to solve this problem ourselves, we've decided to go in a different direction: let's package our work into a "module" for the SDK and then open it up to other developers to try and crack it.

Skip to now, a few months later, and not much has changed. None of the new models seemed natively capable of virtual collaboration on a whiteboard. However we've met lots of teams who think they can make it work... and want help trying.

We tried text-based prompts, autocomplete, and conversational prompting. We eventually shipped our results as teach.tldraw.com. It's a blast to play with, a crazy good demo, but clearly not a "good enough" end user experience.

We spent a few months pretending like it didn't matter, as if we were guaranteed that new models were coming which could produce perfect results. We built the features and identified the patterns that we would need once those new models dropped.

The short answer was, uh, sort of. They could, which was amazing, but even with creative prompt engineering, the models were pretty bad at these tasks. However, the experiments were so compelling and truly weird that we decided to push ahead anyway.

Last year, right after the first multi-modal models like Sonnet and GPT-4 were available, we did a spike on this type of experience.

Could the models recognize what the user is doing on the canvas?

Could the models create content on the canvas?

The seed of the idea was: if a communication channel works for people then it will probably work for AI, too. I like to chat with people. I like to chat with LLMs. I love to whiteboard with people. Maybe I'd like to whiteboard with AIs, too?

If you want an LLM to do stuff on the canvas, you need to:

1. get information from the canvas

2. send that info to an LLM and generate instructions

3. execute those instructions on the canvas

The module helps you with steps 1 and 3.
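Here's a minimal sketch of that three-step loop, just to show the shape of it. Everything in it (`Shape`, `Change`, `describeCanvas`, `generateChanges`, `applyChanges`) is a hypothetical stand-in and not the tldraw ai module's actual API; the model call is stubbed so the example runs offline.

```typescript
// Hypothetical types for illustration; NOT the tldraw ai module's real API.
type Shape = { id: string; kind: "rect" | "text"; x: number; y: number; text?: string };

type Change =
  | { type: "create"; shape: Shape }
  | { type: "move"; id: string; x: number; y: number }
  | { type: "delete"; id: string };

// Step 1: get information from the canvas (here, a plain-text summary).
function describeCanvas(shapes: Shape[]): string {
  return shapes
    .map((s) => {
      const label = s.text ? ' "' + s.text + '"' : "";
      return `${s.id}: ${s.kind} at (${s.x}, ${s.y})${label}`;
    })
    .join("\n");
}

// Step 2: send that info to a model and get instructions back.
// Stubbed on purpose: a real version would call a model provider here.
function generateChanges(prompt: string, canvasSummary: string): Change[] {
  const count = canvasSummary === "" ? 0 : canvasSummary.split("\n").length;
  const shape: Shape = { id: `shape-${count + 1}`, kind: "text", x: 0, y: 100, text: prompt };
  return [{ type: "create", shape }];
}

// Step 3: execute those instructions on the canvas.
function applyChanges(shapes: Shape[], changes: Change[]): Shape[] {
  let next = [...shapes];
  for (const change of changes) {
    switch (change.type) {
      case "create":
        next.push(change.shape);
        break;
      case "move":
        next = next.map((s) => (s.id === change.id ? { ...s, x: change.x, y: change.y } : s));
        break;
      case "delete":
        next = next.filter((s) => s.id !== change.id);
        break;
    }
  }
  return next;
}

const canvas: Shape[] = [{ id: "shape-1", kind: "rect", x: 10, y: 20 }];
const summary = describeCanvas(canvas);
const updated = applyChanges(canvas, generateChanges("Label this box", summary));
```

Keeping the instructions as plain data (`Change[]`) means the model side can be swapped out without touching how the canvas is read or updated.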

Step 2 is up to you.

You can learn more and get started at github.com/tldraw/ai.

Today we're launching the new tldraw ai module. If you're a developer and want to experiment with LLMs on a whiteboard, this is for you.

AI coding agents are here and they're definitely changing the way we write code 🤖

Prisma Postgres is the perfect database for these agents 🤝

Check out how we are solving the database layer for AI coding 👇

pris.ly/ai-agents

Starting in 5 minutes, come say hi